Xiaomi releases and open-sources its embodied large model, MiMo-Embodied

2025-11-23 01:52:00+08

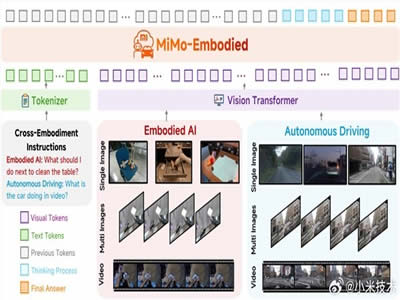

Xiaomi has officially unveiled its embodied large model, MiMo-Embodied, and announced that the model will be fully open-sourced. This move marks a significant step forward for Xiaomi in the field of general-purpose embodied intelligence research.

As embodied intelligence gradually makes its way into homes and autonomous driving reaches large-scale deployment, a critical question has emerged for the industry: how can robots and vehicles better integrate cognition with action, and can indoor task intelligence and outdoor driving intelligence reinforce each other? Xiaomi’s newly released MiMo-Embodied is designed to address exactly these questions. Through unified task modeling, it bridges autonomous driving and embodied intelligence within a single model, marking a shift from “domain-specific models” to “cross-domain capability synergy.”

MiMo-Embodied features three core technical innovations:

- Cross-domain capability coverage: The model supports both the three core tasks of embodied intelligence (affordance reasoning, task planning, and spatial understanding) and the three key tasks of autonomous driving (environmental perception, status prediction, and driving planning), providing a solid foundation for full-scenario intelligence.

- Knowledge transfer between domains: MiMo-Embodied shows a synergistic effect when transferring knowledge between indoor interaction and on-road decision-making, offering a new approach to fusing intelligence across scenarios.

- Multi-stage training strategy: The model is trained in stages, combining embodied and autonomous-driving capability learning with chain-of-thought (CoT) reasoning enhancement and reinforcement learning (RL) fine-tuning, which significantly improves its reliability when deployed in real-world environments.

In terms of performance, Xiaomi reports that MiMo-Embodied sets new records for open-source foundation models on 29 core benchmarks spanning perception, decision-making, and planning, outperforming existing open-source, closed-source, and specialized models.

- In embodied AI, it achieves state-of-the-art (SOTA) results on 17 benchmarks, redefining the boundaries of task planning, affordance prediction, and spatial understanding.

- In autonomous driving, it leads on 12 benchmarks, with gains across the full chain of environmental perception, status prediction, and driving planning.

- In general vision-language understanding, MiMo-Embodied also generalizes well: its foundational perception and reasoning skills are strengthened, and it achieves significant gains on multiple key benchmarks.

Open-source repository:

https://huggingface.co/XiaomiMiMo/MiMo-Embodied-7B